Updated 14/07/2025

These are my notes for deploying .NET applications to Azure; the idea is to be able to follow them again later and apply them to other deployments. Also see Errors When Deploying .NET Applications To Azure and Finding Container Apps Logs In Azure.

For a guided tutorial I highly recommend Dometrain - From Zero to Hero: Deploying .NET Applications to Azure by Mohamad Lawand

An example container app ingress URL is https://demo-aca-bb8c7a39-dev.bluefield-f45026b3.australiaeast.azurecontainerapps.io/swagger/index.html, where demo-aca-bb8c7a39-dev comes from the name set in container_app.tf.

Steps

Each heading shows the steps I followed:

Local Setup: CLI

- Install Terraform CLI and Azure CLI

- Check they are available after installing

```
terraform version    # example v1.9.8
```
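If you want a scripted version of that check, here is a minimal bash sketch. `require_cli` is a hypothetical helper (not part of either CLI) that fails and names any command missing from `PATH`; the version commands are only run once both tools are found.

```bash
#!/usr/bin/env bash
# Hypothetical helper: fail (and name the tool) if any command is missing from PATH.
require_cli() {
  local missing=0 tool
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage (assumes both CLIs were installed as above):
#   require_cli terraform az && terraform version && az version
```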

Local Setup: Sample code

- Clone DemoApi.

I built this simple CRUD(ish) app based on the Microsoft templates and adapted it to use EF Core and Postgres

- Run the databases locally using Docker Compose. There are 2 databases because the tutorial used MS SQL, but PostgreSQL is cheaper and is the one I would use for actual deployments.

```
docker compose up
```

The default passwords are:

| Type | User | Password | Port |
| --- | --- | --- | --- |

- Run the API. It's .NET 8.0, so it still has Swagger. The DB migrations should run automagically when the app starts.

```
dotnet run
```

Swagger is enabled for all environments so I can use the Swagger UI when deployed to Azure; normally it is only enabled for local development or demo purposes.

The manual steps to run the migrations when testing were as follows:

```
dotnet tool install --global dotnet-ef    # globally install the EF tooling
```
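The block above only shows the tool install. A minimal sketch of the usual follow-up, assuming the standard `dotnet ef` commands are what was run (the notes don't list them) and using a hypothetical migration name `InitialCreate`. Setting `DRY_RUN=1` prints the commands instead of running them.

```bash
#!/usr/bin/env bash
# Print commands instead of running them when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Assumed manual migration flow; "InitialCreate" is a hypothetical migration name.
manual_migrate() {
  local name="${1:-InitialCreate}"
  run dotnet ef migrations add "$name" || return
  run dotnet ef database update
}
```

Run it from the project folder that contains the DbContext, or add `--project` to both commands.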

- Check the app can locally insert and read data from the database

Create Azure Infrastructure With Terraform

You will need to know your tenant ID; it's just a GUID like 00000000-0000-0000-0000-000000000001

- Log in to Azure, then select and note the subscription

```
az login --tenant 00000000-0000-0000-0000-000000000001    # logs you into the tenant, will open the Azure portal login
```

- Copy vars.tf from `iac_example` into `iac` and update some values:
  - `subscription_id`, which came from `az login` (this is just temporary while creating the infra from local)
  - `sql_pass`, example `dfb18358-5994-4470-b75a-109981a3fcf9`, this is just a random GUID
  - `sql_user`, example `sqldemo-admin`
  - `group_key`, example `bb8c7a39` (some Azure resources need unique names, so while testing it's helpful to use the start of a random GUID)

This CLI command was helpful when I was trying to understand the regions, which I stored as `location` in vars.tf:

```
az account list-locations -o table    # list of Azure regions
```
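The other vars.tf values can be gathered from the command line too. A minimal sketch: `az account set` and `az account show --query id --output tsv` select the subscription and print its id, while `new_guid` and `group_key_from_guid` are hypothetical helpers that mirror how `sql_pass` and `group_key` were picked by hand. `new_guid` assumes `uuidgen` is installed and otherwise falls back to the Linux-only `/proc` source.

```bash
#!/usr/bin/env bash
# Print commands instead of running them when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Select the subscription and print its id (the value for subscription_id).
select_subscription() {
  run az account set --subscription "$1" || return
  run az account show --query id --output tsv
}

# Random lowercase GUID, e.g. for sql_pass; uuidgen if available, else Linux /proc.
new_guid() {
  if command -v uuidgen >/dev/null 2>&1; then
    uuidgen | tr '[:upper:]' '[:lower:]'
  else
    cat /proc/sys/kernel/random/uuid
  fi
}

# First block of a GUID, e.g. for group_key (bb8c7a39-... -> bb8c7a39).
group_key_from_guid() {
  printf '%s\n' "${1%%-*}" | tr '[:upper:]' '[:lower:]'
}
```

Usage: `group_key_from_guid "$(new_guid)"` gives a fresh 8-character key.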

- Copy setup.tf and check the `hashicorp/azurerm` provider version is current, example `4.14.0`
  - sets the cloud provider, API versions and account to connect to by subscription id
  - see azurerm

- Run `terraform init`

```
terraform init    # local initialization, tracked in `.terraform.lock.hcl`, which records the provider selections made
```

- Copy the files listed below one at a time, in the order listed, and each time run `terraform plan` and `terraform apply`. This creates the infrastructure needed to run the application in Azure.

```
terraform plan    # test run: compare the local tf files with Azure and show the difference
```

- resource-group.tf
  - groups resources in Azure
  - see resource-group
- container-registry.tf
  - registry to store and manage Docker images by version
  - see container_registry
- log_analytics_workspace.tf
  - logging and analytics
  - see log_analytics_workspace
- container_app_environment.tf
- container_app.tf
  - see container_app
- mssql.tf
  - see mssql_server
  - see mssql_database
  - see mssql_firewall_rule

Note: azurerm_postgresql_flexible_server replaces the single server, which was retired on March 28, 2025

- pgsql.tf (Single Server)
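A sketch of the plan/apply loop for each file, assuming you want `terraform apply` to run exactly the plan you just reviewed: `-out` saves the plan, and `terraform apply <file>` applies that saved plan without re-planning or prompting again. `tfplan` is a hypothetical file name; keep it out of source control.

```bash
#!/usr/bin/env bash
# Print commands instead of running them when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Save the plan to a file, then apply exactly that saved plan.
plan_and_apply() {
  local plan_file="${1:-tfplan}"
  run terraform plan -out="$plan_file" || return
  run terraform apply "$plan_file"
}
```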

The end result seen from Azure showed the resources in my Resource Group:

You can also view the groups from the CLI

1 | az group list --output table ~ list resource groups |

You can also format the terraform files with terraform fmt myfile.tf

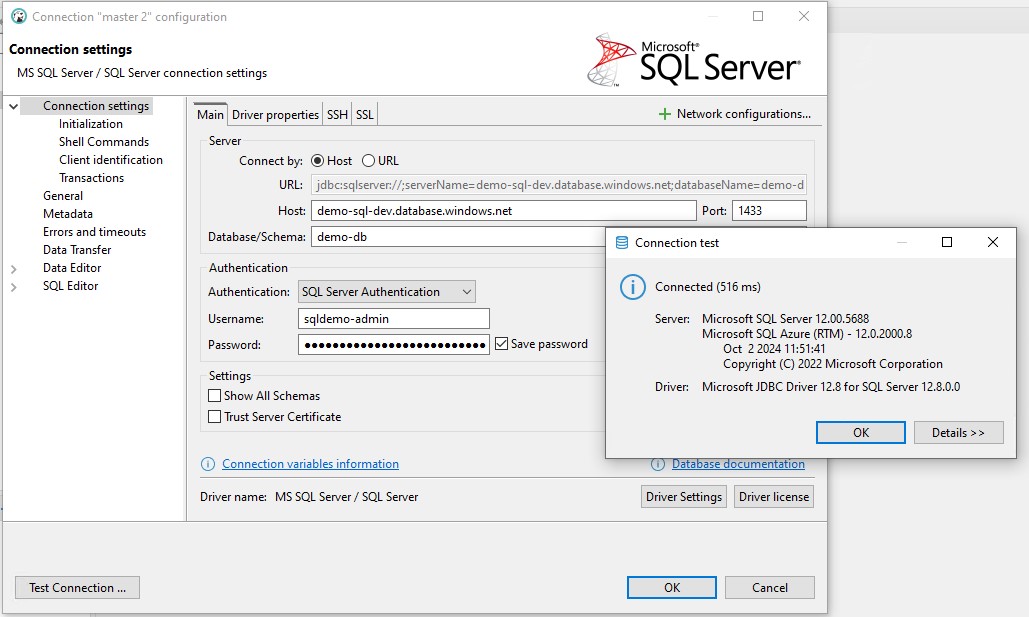
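Formatting and syntax can be checked together before each plan. A minimal sketch using `terraform fmt -check -recursive` (non-zero exit if any file needs formatting, without rewriting it) and `terraform validate` (which needs `terraform init` to have run first); `lint_terraform` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Print commands instead of running them when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Fail fast on formatting, then validate the configuration.
lint_terraform() {
  run terraform fmt -check -recursive || return
  run terraform validate
}
```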

Test Connect To SQL

The username and DNS name shown here were just for the demo; best practice is never to commit or share any secrets.

I then added my own IP address to the firewall by navigating to Resource Group (demo-rg-bb8c7a39-dev) -> selected the SQL server (demo-sql-bb8c7a39-dev) -> Security -> Networking -> Firewall rules -> Add your client IPv4 address (xxx.xxx.xxx.xxx) -> Azure automagically filled mine in -> Rule name Carl home IP -> Save. Your IP won't be static, so it will probably need updating later.
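The same firewall rule can be added from the CLI. A sketch with `az sql server firewall-rule create`, where start and end are the same address to allow a single IPv4; `allow_client_ip` and the rule name `carl-home-ip` are hypothetical.

```bash
#!/usr/bin/env bash
# Print commands instead of running them when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: allow one client IPv4 address through the Azure SQL firewall.
allow_client_ip() {
  local rg="$1" server="$2" rule="$3" ip="$4"
  run az sql server firewall-rule create \
    --resource-group "$rg" \
    --server "$server" \
    --name "$rule" \
    --start-ip-address "$ip" \
    --end-ip-address "$ip"
}
```

Usage, assuming the third-party `https://api.ipify.org` service to look up your public IP: `allow_client_ip demo-rg-bb8c7a39-dev demo-sql-bb8c7a39-dev carl-home-ip "$(curl -s https://api.ipify.org)"`. When the IP changes, `az sql server firewall-rule update` takes the same arguments.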

I then got the SQL server address in Azure by navigating to SQL server (demo-sql-bb8c7a39-dev). My server name was demo-sql-bb8c7a39-dev.database.windows.net, where the demo-sql-bb8c7a39-dev part comes from mssql.tf, where we set the server name.

Using the sql_user and sql_pass values from vars.tf, I then connected using DBeaver.

Create GitHub Secrets and Variables

You set these in the GitHub UI under the repository's Settings -> Secrets and variables -> Actions

- Secrets tab -> Repository secrets -> New repository secret

- Variables tab -> Repository variables -> New repository variable

For Azure Container Registry

To get these values, navigate to Container Registry demoacrbb8c7a39dev -> Settings -> Access keys:

```
${{ vars.ACR_SERVER }}    # demoacrbb8c7a39dev.azurecr.io
```
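The Access keys values can also be read with the CLI. A sketch, assuming the registry's admin user is enabled (otherwise `az acr credential show` fails); `acr_login_server` and `acr_credentials` are hypothetical helpers, and `az acr show --name demoacrbb8c7a39dev --query loginServer --output tsv` returns the login server straight from Azure.

```bash
#!/usr/bin/env bash
# Print commands instead of running them when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# ACR login servers follow <registry-name>.azurecr.io (the ACR_SERVER value).
acr_login_server() {
  printf '%s.azurecr.io\n' "$1"
}

# Admin username and first password, as shown under Settings -> Access keys.
acr_credentials() {
  run az acr credential show --name "$1" \
    --query "{username: username, password: passwords[0].value}" --output json
}
```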

For Logging into Azure

Create a Service Principal (SP) and configure its access to Azure resources. Here we copy the entire JSON object and save it as the AZ_CREDENTIALS GitHub secret. This SP is used when logging into Azure to deploy both the application and the IAC.

```
az ad sp create-for-rbac --name github-auth --role contributor \
  --scopes /subscriptions/00000000-0000-0000-0000-000000000000/resourceGroups/demo-rg-bb8c7a39-dev \
  --json-auth --output json
```

```
${{ secrets.AZ_CREDENTIALS }}
```
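Instead of copying the JSON by hand, it can be piped into the secret with the GitHub CLI, since `gh secret set NAME` reads the value from stdin when `--body` is omitted. A sketch, assuming both `az` and `gh` are logged in and it is run from inside the repo clone; `rg_scope` and `create_deploy_sp` are hypothetical helpers.

```bash
#!/usr/bin/env bash
# Resource group scope string used by --scopes.
rg_scope() {
  printf '/subscriptions/%s/resourceGroups/%s\n' "$1" "$2"
}

# Create the deploy SP and store its JSON as AZ_CREDENTIALS without printing it.
# Args: subscription id, resource group name.
create_deploy_sp() {
  local creds
  creds="$(az ad sp create-for-rbac --name github-auth --role contributor \
    --scopes "$(rg_scope "$1" "$2")" --json-auth --output json)" || return
  printf '%s' "$creds" | gh secret set AZ_CREDENTIALS
}
```

Usage: `create_deploy_sp 00000000-0000-0000-0000-000000000000 demo-rg-bb8c7a39-dev`.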

For IAC (RBAC)

Create another Service Principal (SP); this time we copy the values out of the JSON and save them as individual GitHub secrets. This SP has no role or scopes and is used by the Terraform deployment itself.

```
az ad sp create-for-rbac --name iac-terraform-auth
```

```
${{ secrets.ARM_CLIENT_ID }}    # appId
```

- https://learn.microsoft.com/en-us/cli/azure/ad/sp?view=azure-cli-latest#az-ad-sp-create-for-rbac

- https://registry.terraform.io/providers/hashicorp/azurerm/latest/docs/guides/service_principal_client_secret
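A sketch of storing the second SP's fields with `gh secret set`. Only `ARM_CLIENT_ID` (from `appId`) comes from these notes; mapping `password` to `ARM_CLIENT_SECRET`, `tenant` to `ARM_TENANT_ID`, and adding `ARM_SUBSCRIPTION_ID` is an assumption based on the environment variables the azurerm provider reads. `set_arm_secrets` is a hypothetical helper, and `DRY_RUN=1` echoes the values, so only use it with dummy data.

```bash
#!/usr/bin/env bash
# Print commands instead of running them when DRY_RUN=1 (this prints secret values!).
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Assumed field -> secret mapping for the iac-terraform-auth SP output.
set_arm_secrets() {
  local app_id="$1" password="$2" tenant="$3" subscription="$4"
  run gh secret set ARM_CLIENT_ID --body "$app_id" || return
  run gh secret set ARM_CLIENT_SECRET --body "$password" || return
  run gh secret set ARM_TENANT_ID --body "$tenant" || return
  run gh secret set ARM_SUBSCRIPTION_ID --body "$subscription"
}
```

Passing values with `--body` leaves them in shell history; piping them on stdin (as `gh secret set NAME` without `--body` allows) avoids that.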

For IAC (SQL Secrets)

These are the values manually added to vars.tf

```
${{ secrets.TF_VAR_DB_PASSWORD }}    # used with `terraform plan` as var `sql_pass`, example dfb18358-5994-4470-b75a-109981a3fcf9
```

The connection string will look something like `Server=demo-sql-bb8c7a39-dev.database.windows.net,1433; Initial Catalog=demo_db; User=sqldemo-admin; Password=dfb18358-5994-4470-b75a-109981a3fcf9; Encrypt=False;`

ENSURE it's added wrapped in quotes.

```
${{ secrets.AZ_MSSQL_DB_CONN }}    # used when deploying, changes the app setting ConnectionStrings__SqlServer
```
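A minimal sketch for building that connection string from the vars.tf inputs. The format mirrors the example string above (port 1433, `Encrypt=False`), and `mssql_conn` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Build the connection string used for AZ_MSSQL_DB_CONN.
# Args: server name (without the .database.windows.net suffix), database, user, password.
mssql_conn() {
  local server="$1" db="$2" user="$3" pass="$4"
  printf 'Server=%s.database.windows.net,1433; Initial Catalog=%s; User=%s; Password=%s; Encrypt=False;\n' \
    "$server" "$db" "$user" "$pass"
}
```

Usage: `gh secret set AZ_MSSQL_DB_CONN --body "$(mssql_conn demo-sql-bb8c7a39-dev demo_db sqldemo-admin "$SQL_PASS")"`, where `SQL_PASS` is a hypothetical variable holding `sql_pass`. The double quotes keep the whole string, including its `;` separators, as one argument.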

GitHub Actions - Deploy Application

- Copy `\.github\examples\deploy-api.yaml` to `\.github\workflows\deploy-api.yaml`
- Update the config values. Potentially these could come from a config, but this is more intentional and these values won't change for the application's life span.

```
demoacrdev (a few instances) -> demoacrbb8c7a39dev
```

- Commit it and check it deploys, example https://github.com/carlpaton/deploying-dotnet-azure/actions/runs/12670053312
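The copy and rename can be done in one step. A sketch that assumes the placeholder `demoacrdev` and your registry name contain only letters and digits, so they are safe inside a `sed` pattern; `rename_registry` is a hypothetical helper that reads the workflow on stdin and writes the result to stdout.

```bash
#!/usr/bin/env bash
# Replace every occurrence of the placeholder registry name ($1) with yours ($2).
rename_registry() {
  sed "s/$1/$2/g"
}

# Usage from the repo root (forward slashes on macOS/Linux):
#   rename_registry demoacrdev demoacrbb8c7a39dev \
#     < .github/examples/deploy-api.yaml > .github/workflows/deploy-api.yaml
```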

Overview of deploy-api.yaml

- JOB:

- build-and-push-image

- STEPS:

- Checkout repository

- Setup WebApi .NET

- Configure Azure Container Registry (ACR)

- Get commit SHA

- Build and push image to Azure Container Registry (ACR)

- STEPS:

- deploy-image-to-container-service

- STEPS

- Login to Azure

- Deploy to Azure Container Apps (ACA)

- STEPS

- build-and-push-image
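The "Get commit SHA" step presumably feeds the image tag. A sketch of how such a reference can be composed locally, assuming a 7-character short SHA and a hypothetical image name `demo-api` (the real workflow may tag differently); `image_ref` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# <login server>/<image>:<first 7 characters of the commit SHA>
image_ref() {
  printf '%s/%s:%.7s\n' "$1" "$2" "$3"
}

# Usage (assumes Docker is logged in via `az acr login --name demoacrbb8c7a39dev`):
#   ref="$(image_ref demoacrbb8c7a39dev.azurecr.io demo-api "$(git rev-parse HEAD)")"
#   docker build -t "$ref" . && docker push "$ref"
```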

GitHub Actions - Deploy IAC

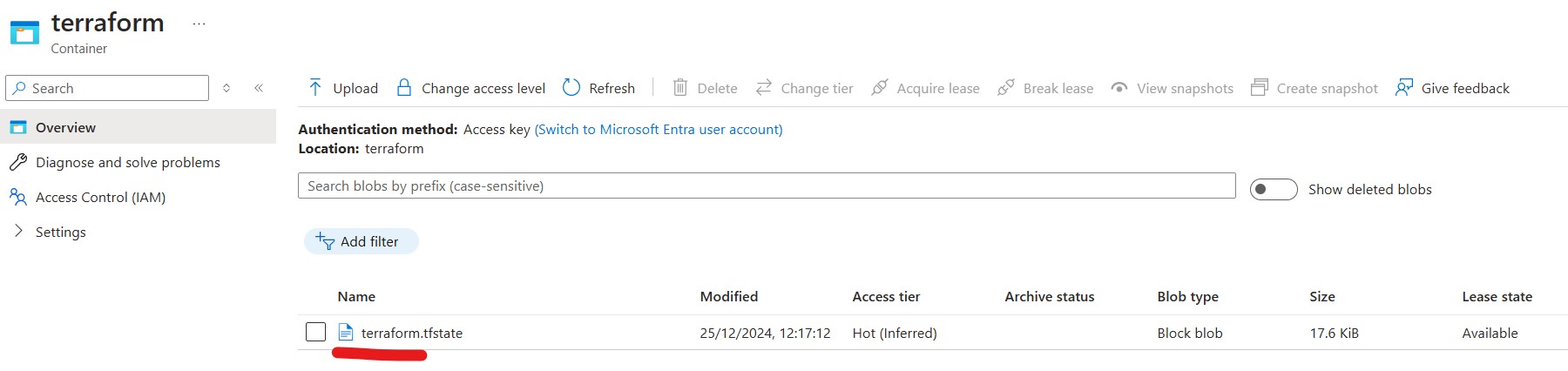

We need to migrate the TF state (terraform.tfstate and terraform.tfstate.backup) from being stored locally to being stored in the cloud.

- Create a new resource group in Azure using the portal -> `Resource groups` -> `Create`
  - Name -> `reference-rg`
  - Region -> `(Asia Pacific) Australia East`
  - Tags -> `environment=dev`, `source=azure-portal`, `group_key=bb8c7a39`
  - `Review and create` -> `Create`

- Add storage in the portal -> `reference-rg` -> `Create` -> search for `storage account` -> select `Storage account` by Microsoft | Azure Service
  - Plan -> `Storage account` -> `Create`
  - Storage account name -> `demoiacbb8c7a39`
  - Region -> `(Asia Pacific) Australia East`
  - Primary service -> `Azure Blob Storage or Azure Data Lake Storage Gen 2`
  - Performance -> `Standard`
  - Redundancy -> `Locally-redundant storage (LRS)`
  - `Review and create` -> `Create`; this takes a minute or so and shows `Deployment is in progress`
  - `Go to resource` -> `Data storage` -> `Containers` -> `+ Container` (New Container)
  - Name -> `terraform` -> `Create`

- Update setup.tf to include the backend

```
terraform {
```

- Migrate the terraform files from local to the new blob storage
  - Run `terraform plan`; this should error with `Error: Backend initialization required, please run "terraform init"` because we added a new backend
  - Run `terraform init`; this will ask `Do you want to copy existing state to the new backend?`, type `yes`

Locally terraform.tfstate should now be blank.
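The portal steps and the migration can also be scripted. A sketch using the names from above, with these assumptions: the tag `source=azure-cli` (instead of `azure-portal`), a state blob key of `terraform.tfstate`, and a setup.tf that declares an `azurerm` backend block leaving these settings out (partial configuration), so `-backend-config` supplies them. `init -migrate-state` still asks before copying the existing state.

```bash
#!/usr/bin/env bash
# Print commands instead of running them when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

RG="reference-rg"
LOCATION="australiaeast"
ACCOUNT="demoiacbb8c7a39"
CONTAINER="terraform"

# CLI equivalent of the portal steps: resource group, LRS storage account, blob container.
create_state_storage() {
  run az group create --name "$RG" --location "$LOCATION" \
    --tags environment=dev source=azure-cli group_key=bb8c7a39 || return
  run az storage account create --name "$ACCOUNT" --resource-group "$RG" \
    --location "$LOCATION" --sku Standard_LRS || return
  run az storage container create --name "$CONTAINER" --account-name "$ACCOUNT"
}

# Point the azurerm backend at the new container and migrate the local state into it.
init_remote_state() {
  run terraform init -migrate-state \
    -backend-config="resource_group_name=$RG" \
    -backend-config="storage_account_name=$ACCOUNT" \
    -backend-config="container_name=$CONTAINER" \
    -backend-config="key=terraform.tfstate"
}
```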

- Copy `\.github\examples\deploy-iac.yaml` to `\.github\workflows\deploy-iac.yaml`; no changes are needed, so commit and push.

Overview of deploy-iac.yaml

- JOB:

- terraform-deploy

- STEPS:

- Checkout repository

- Login to Azure

- Terraform install

- Terraform initialization

- Terraform validate

- Terraform plan

- Terraform apply

- Notify failure

- STEPS:

- terraform-deploy

- Add a tag change, commit & push

```
resource "azurerm_resource_group" "demo-rg" {
```

If there are state lock issues like `Error: Error acquiring the state lock` -> `Error message: state blob is already locked`, the state can be unlocked from the CLI, where 092ff1c9-467a-39a4-d0cf-b72ae52e5805 is the lock ID:

```
terraform force-unlock -force 092ff1c9-467a-39a4-d0cf-b72ae52e5805
```
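The lock ID is printed in the error's `Lock Info` section. A small sketch that extracts it, assuming the `ID:` label format used by recent Terraform versions (the line may carry a border prefix such as `│`); `lock_id_from_error` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Read Terraform error output on stdin and print the value after the first "ID:" label.
lock_id_from_error() {
  awk '{ for (i = 1; i < NF; i++) if ($i == "ID:") { print $(i + 1); exit } }'
}

# Usage: only unlock once you are sure no other run (e.g. the pipeline) holds the lock.
#   id="$(terraform plan 2>&1 | lock_id_from_error)"
#   [ -n "$id" ] && terraform force-unlock -force "$id"
```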

This should now deploy IAC changes.

References

- https://www.npgsql.org/efcore/?tabs=onconfiguring

- https://datacenters.microsoft.com/globe/explore

- https://learn.microsoft.com/en-us/azure/azure-resource-manager/troubleshooting/common-deployment-errors

- https://learn.microsoft.com/en-us/azure/azure-resource-manager/troubleshooting/error-register-resource-provider

- https://learn.microsoft.com/en-us/cli/azure/install-azure-cli-windows?tabs=azure-cli

Technologies Used

- Azure Container Registry (Build, store and manage container images)

- Azure Container Apps (Built on K8s, is Serverless & Fully managed by Azure)

- Azure SQL Database (Managed PaaS database engine)

- Azure Log Analytics Workspace (Observability and Analytics)

- GitHub Actions

- Terraform (Consistently build infrastructure using automation)

- .NET SDK

Costings

- Database

- Container App

- Container Registry

- Storage