Story

I want to solution design something that teaches me more about distributed computing. In the past I had a play with this using a Raspberry Pi Cluster and the Message Passing Interface (MPI), launched with mpiexec, running Python 2.

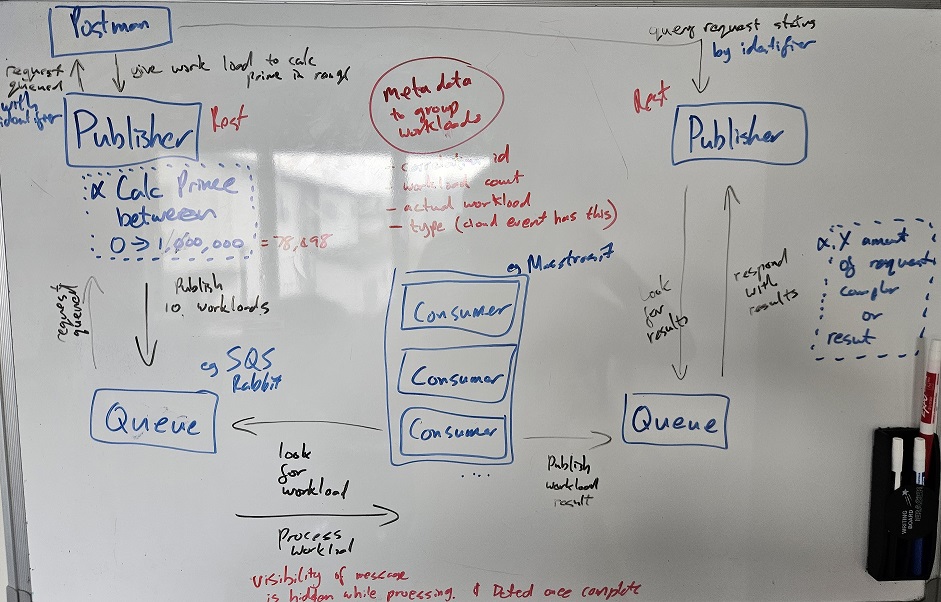

This was cool, but I want to leverage more modern technologies like REST to queue a request and then query its progress/result based on an identifier. This is essentially the Asynchronous Request-Reply pattern.
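To make the pattern concrete, here is a minimal in-memory sketch in Python rather than C#. The `JobStore` and `Job` names and the status values are hypothetical; the comments note which HTTP call each method would stand in for (submit returns an identifier straight away, then the client polls for the result).

```python
import uuid
from dataclasses import dataclass
from typing import Optional


@dataclass
class Job:
    # Hypothetical job record; a real Publisher would persist this in durable storage.
    job_id: str
    lower: int
    upper: int
    status: str = "Queued"  # Queued -> Running -> Completed
    result: Optional[int] = None


class JobStore:
    """In-memory stand-in for the Publisher's job store and the queue (assumption)."""

    def __init__(self) -> None:
        self._jobs: dict[str, Job] = {}
        self._queue: list[str] = []  # stand-in for RabbitMQ or SQS

    def submit(self, lower: int, upper: int) -> str:
        # Like POST /jobs: enqueue the work and return an identifier immediately
        # (an HTTP API would typically answer 202 Accepted with a status URL).
        job_id = str(uuid.uuid4())
        self._jobs[job_id] = Job(job_id, lower, upper)
        self._queue.append(job_id)
        return job_id

    def take_next(self) -> Optional[Job]:
        # What a Consumer does: pull the oldest queued job and mark it Running.
        if not self._queue:
            return None
        job = self._jobs[self._queue.pop(0)]
        job.status = "Running"
        return job

    def complete(self, job_id: str, result: int) -> None:
        # Called when a Consumer reports its result back.
        job = self._jobs[job_id]
        job.status = "Completed"
        job.result = result

    def status(self, job_id: str) -> Job:
        # Like GET /jobs/{id}: the client polls until status is "Completed".
        return self._jobs[job_id]


if __name__ == "__main__":
    store = JobStore()
    job_id = store.submit(0, 100)
    print(store.status(job_id).status)  # Queued
    job = store.take_next()
    store.complete(job.job_id, 25)      # there are 25 primes below 100
    print(store.status(job_id).result)  # 25
```

The key design point is that `submit` never waits for the work: the caller only holds an identifier, which is what lets the Publisher and Consumers scale independently.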

I want to use a queue service (perhaps RabbitMQ or AWS SQS) to decouple/loosely couple the components. I'm leaning towards RabbitMQ because, although I understand the fundamentals of a queue, I have not used RabbitMQ itself. I think CloudEvents is a good starting point for structuring the event, but in reality MessagingContracts can just be classes shared between applications: simple record DTOs describing the event.
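A sketch of what the shared contracts and a CloudEvents envelope might look like, assuming Python frozen dataclasses in place of C# records. The contract names and the `primes.` event-type prefix are hypothetical; `specversion`, `id`, `source` and `type` are the attributes the CloudEvents 1.0 specification requires.

```python
import json
import uuid
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


# Hypothetical message contracts: immutable DTOs shared by the Publisher and Consumers.
@dataclass(frozen=True)
class CalculatePrimesRequested:
    job_id: str
    lower: int
    upper: int


@dataclass(frozen=True)
class PrimesCalculated:
    job_id: str
    lower: int
    upper: int
    count: int


def to_cloud_event(payload, source: str) -> str:
    """Wrap a contract in a CloudEvents 1.0 JSON envelope (structured mode)."""
    event = {
        "specversion": "1.0",                          # required
        "id": str(uuid.uuid4()),                       # required, unique per event
        "source": source,                              # required, identifies the producer
        "type": f"primes.{type(payload).__name__}",    # required; naming is an assumption
        "time": datetime.now(timezone.utc).isoformat(),  # optional, RFC 3339 timestamp
        "datacontenttype": "application/json",         # optional
        "data": asdict(payload),                       # the DTO itself
    }
    return json.dumps(event)


if __name__ == "__main__":
    print(to_cloud_event(CalculatePrimesRequested("job-1", 0, 100_000), "/publisher"))
```

Keeping the DTO separate from the envelope means the same contract classes can be reused whether or not CloudEvents ends up on the wire.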

Additionally, I want to use C# for the heavy lifting, as I have a good understanding of BackgroundService and love the language. I want to try out MassTransit, as it claims to "Easily build reliable distributed applications and deploy using RabbitMQ, Azure Service Bus, and Amazon SQS". I like all these words ❤️

Lastly, I want to use Kubernetes on a Raspberry Pi Cluster running K3s, as this can host my Publisher (the REST API), the Queues themselves, my Consumers and any persistent storage mechanism.

Task

The story needs a problem to solve. For this I'll just calculate the prime numbers in a given range, a problem Gary Explains solved in the past using Python 2.

Example: calculate the number of prime numbers between 0 and 1,000,000. Google Gemini suggested I use the Prime Number Theorem instead of an iterative approach. This theorem tells us that the number of primes less than or equal to a certain value x is approximately equal to x divided by the natural logarithm of x, ln(x). I understand some of those words, but essentially that's 1,000,000 / ln(1,000,000) ≈ 1,000,000 / 13.82 ≈ 72,382. The exact count is 78,498, so the estimate is about 8% low, but if anything I now have a rough value to balance back to.
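The estimate and the exact count can be compared directly. This sketch assumes Python and uses a Sieve of Eratosthenes purely to get the exact reference count; it is not the approach the Consumers would use.

```python
import math


def pnt_estimate(x: int) -> float:
    # Prime Number Theorem approximation: pi(x) ~ x / ln(x)
    return x / math.log(x)


def count_primes_sieve(x: int) -> int:
    # Exact count of primes <= x via the Sieve of Eratosthenes (reference value only).
    if x < 2:
        return 0
    is_prime = bytearray([1]) * (x + 1)
    is_prime[0] = is_prime[1] = 0
    for p in range(2, math.isqrt(x) + 1):
        if is_prime[p]:
            # Cross out every multiple of p from p*p upwards.
            is_prime[p * p :: p] = bytearray(len(range(p * p, x + 1, p)))
    return sum(is_prime)


if __name__ == "__main__":
    x = 1_000_000
    print(round(pnt_estimate(x)))  # 72382
    print(count_primes_sieve(x))   # 78498
```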

Meh, but then how would I warm up the CPUs in the Pis? I'll do the iterative approach, thank you very much, as solving the math problem is not the focus here; it's learning more about solution design and distributed computing. The solution could then be applied to other problems that need distributed compute power.
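A minimal sketch of the iterative approach, assuming the Publisher splits the range into fixed-size chunks (one message each), each Consumer counts the primes in its chunk by trial division, and the partial counts are summed. `split_range`, `count_primes_in_range` and the chunk size are hypothetical choices, not part of any library.

```python
import math


def is_prime(n: int) -> bool:
    # Deliberately naive trial division: the point is to keep the Pi CPUs busy.
    if n < 2:
        return False
    if n % 2 == 0:
        return n == 2
    for d in range(3, math.isqrt(n) + 1, 2):
        if n % d == 0:
            return False
    return True


def count_primes_in_range(lower: int, upper: int) -> int:
    # Work done by one Consumer for one message: counts primes in [lower, upper).
    return sum(1 for n in range(lower, upper) if is_prime(n))


def split_range(lower: int, upper: int, chunk_size: int) -> list[tuple[int, int]]:
    # Work done by the Publisher: one half-open (lower, upper) chunk per message.
    return [(start, min(start + chunk_size, upper)) for start in range(lower, upper, chunk_size)]


if __name__ == "__main__":
    chunks = split_range(0, 1_000_000, 100_000)  # 10 messages
    partials = [count_primes_in_range(lo, hi) for lo, hi in chunks]
    print(sum(partials))  # 78498
```

Half-open chunks `[lower, upper)` mean no number is counted twice when the partial results are added together, whichever Consumer processed each chunk.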

Also I do what I want ╭∩╮ (︶︿︶) ╭∩╮

Solutions

White Board

I wrote up a quick white board design. This will most likely change as I organise my thoughts, but as a first cut it looks like it will work.

After drawing this up, some concerns I could think of include:

- How will the Publisher aggregate all the responses that the Consumers created? A message can only be polled for x times before it expires or is pushed to a DLQ (dead-letter queue). Additionally, messages only live for a short time: the AWS SQS default retention period is 4 days, but it can be configured between 1 minute and 14 days.
  - Potentially a database store is better, or perhaps for a POC I could just log somewhere

- What happens if a Consumer crashes while processing a request?
  - SQS hides a message for a visibility timeout once it has been polled for, so another Consumer cannot pick it up at the same time. If the Consumer crashes, the message becomes visible again once the timeout expires. I'll need to consider how many times it can be polled for and also ensure it's deleted once processed


- Why use queues at all?
  - A queue supports a simpler message bus, whereas a database would need to serialize the message to a string
  - Cheaper in the long run, and a message in the queue is intended to be ephemeral (last a short time)

- This brings another concern: how can I make sure these component events are atomic?
  - E.g. the Consumer picks up some work to do and successfully publishes the result; both steps should succeed or fail together
    - Potentially a queue is not the best way to aggregate the results; maybe this must go directly to a database store
  - The Inbox/Outbox pattern, which MassTransit supports, solves this issue. Super helpful video here by Nick Chapsas

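The visibility timeout and receive-count behaviour from the concerns above can be modelled with a toy queue. This is a hypothetical simulation for reasoning about crash recovery, not the SQS or RabbitMQ API; `now` is passed in explicitly so the timing is deterministic, and `max_receive_count` loosely mirrors an SQS redrive policy.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(eq=False)  # compare messages by identity, not by field values
class Message:
    body: str
    receive_count: int = 0
    invisible_until: float = 0.0


class SimulatedQueue:
    """Toy model of SQS-style delivery; class and method names are hypothetical."""

    def __init__(self, visibility_timeout: float, max_receive_count: int) -> None:
        self.visibility_timeout = visibility_timeout
        self.max_receive_count = max_receive_count
        self.messages: list[Message] = []
        self.dead_letters: list[Message] = []

    def send(self, body: str) -> None:
        self.messages.append(Message(body))

    def receive(self, now: float) -> Optional[Message]:
        for msg in list(self.messages):
            if msg.invisible_until > now:
                continue  # another Consumer is still working on it
            if msg.receive_count >= self.max_receive_count:
                # Received too many times without being deleted: move it to the DLQ.
                self.messages.remove(msg)
                self.dead_letters.append(msg)
                continue
            msg.receive_count += 1
            msg.invisible_until = now + self.visibility_timeout
            return msg
        return None

    def delete(self, msg: Message) -> None:
        # A Consumer must delete after processing, otherwise the message reappears.
        self.messages.remove(msg)


if __name__ == "__main__":
    q = SimulatedQueue(visibility_timeout=30, max_receive_count=2)
    q.send("0-100000")
    first = q.receive(now=0)     # Consumer A takes it, then crashes
    print(q.receive(now=10))     # None: still invisible
    second = q.receive(now=31)   # visible again, Consumer B retries
    print(second.receive_count)  # 2
```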

Miro Overview

I love a good Miro board to iterate on an idea! Here I structured the high-level design based on the white board ideas.

WIP!